Abstract

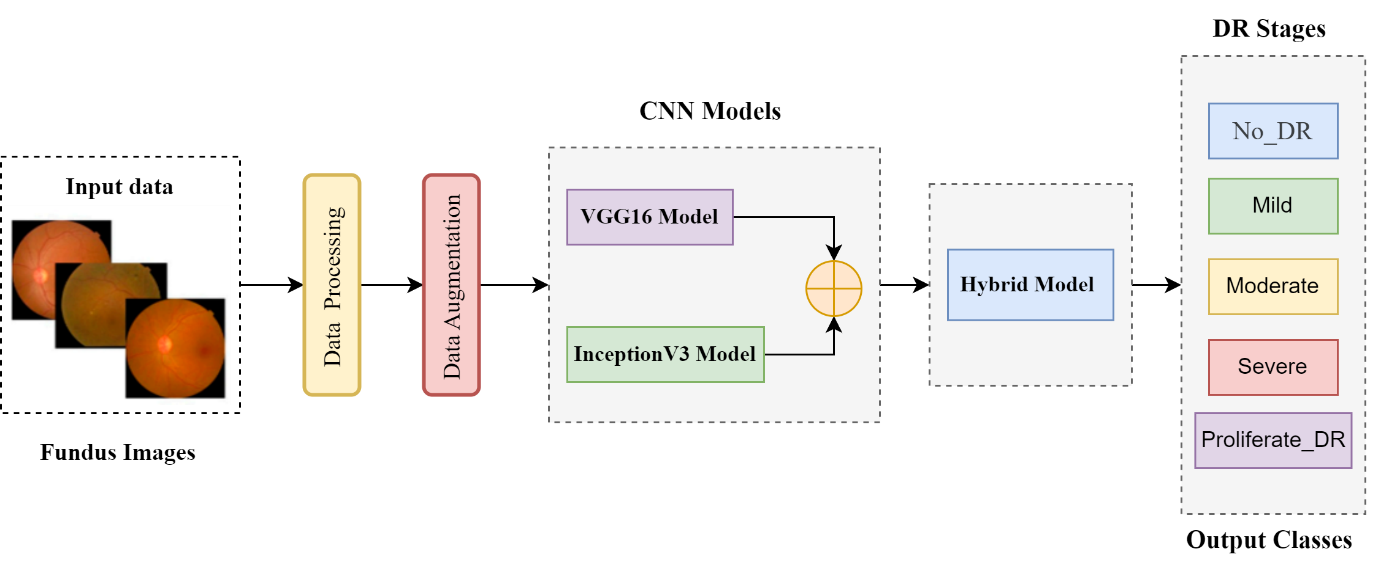

Diabetic retinopathy (DR) has recently become the primary cause of blindness in adults, creating growing demand for an efficient, real-time system for DR detection and classification. We introduce a novel deep hybrid model for automated DR recognition and classification. The model combines the strengths of two CNN architectures, Inception V3 and VGG16. VGG16 efficiently captures both fine and wide-ranging features such as textures and edges, which are crucial for classifying the initial signs of DR. Inception V3’s architecture is proficient at detecting multiscale patterns, providing broad context for identifying the more complex DR severity stages. Together, the two networks extract diverse appearance features from retinal images, which better supports DR detection and classification. We evaluated the proposed model on diverse datasets, including EyePACS1 and APTOS2019. It achieved 99.63% accuracy in classifying DR severity levels on the EyePACS1 dataset and 98.70% accuracy on the APTOS2019 dataset, indicating that it distinguishes the different stages well and is highly efficient at DR detection. The model can help clinicians and medical experts identify and classify DR stages and severity levels early, supporting more effective patient management and timely treatment.
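The abstract does not say how the two backbones are combined. One common option is feature-level fusion: concatenate the pooled feature vector from each network and pass the result to a single softmax classifier. The sketch below illustrates only that idea, in plain Python with random stand-in features. The function names, the five-grade label set, the toy feature sizes, and the choice of concatenation are all assumptions made for illustration, not the authors' implementation.

```python
import math
import random

# Five severity grades commonly used for DR grading (an assumed label set;
# the abstract only refers to "severity levels").
GRADES = ["No DR", "Mild", "Moderate", "Severe", "Proliferative"]


def fuse_features(vgg_features, inception_features):
    """Concatenate the two backbone feature vectors into one descriptor."""
    return list(vgg_features) + list(inception_features)


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    peak = max(logits)
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def classify(fused, weights, biases):
    """Apply one dense layer followed by softmax to the fused descriptor."""
    logits = [
        sum(w * x for w, x in zip(row, fused)) + b
        for row, b in zip(weights, biases)
    ]
    return softmax(logits)


def demo(seed=0):
    """Run the fusion head on random stand-in features with random weights."""
    rng = random.Random(seed)
    # Toy stand-ins for pooled backbone outputs. Real globally pooled
    # VGG16 and Inception V3 features have 512 and 2048 dimensions.
    vgg_features = [rng.random() for _ in range(8)]
    inception_features = [rng.random() for _ in range(12)]
    fused = fuse_features(vgg_features, inception_features)
    # Untrained, hypothetical classifier weights: one row per grade.
    weights = [[rng.uniform(-0.1, 0.1) for _ in fused] for _ in GRADES]
    biases = [0.0] * len(GRADES)
    probs = classify(fused, weights, biases)
    return fused, probs
```

Calling `demo()` returns the fused descriptor and one probability per grade. In a real pipeline the stand-in vectors would come from the pretrained backbones and the classifier weights would be learned from labeled retinal images.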

Keywords

retinal images

diabetic retinopathy (DR)

deep hybrid model

Inception V3

VGG16

Funding

This work received no funding.

Cite This Article

APA Style

Shazia, A., Dahri, F. H., Ali, A., Adnan, M., Laghari, A. A., & Nawaz, T. (2024). Automated Early Diabetic Retinopathy Detection Using a Deep Hybrid Model. IECE Transactions on Emerging Topics in Artificial Intelligence, 1(1), 71–83. https://doi.org/10.62762/TETAI.2024.305743

Publisher's Note

IECE stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

IECE or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.
