Abstract

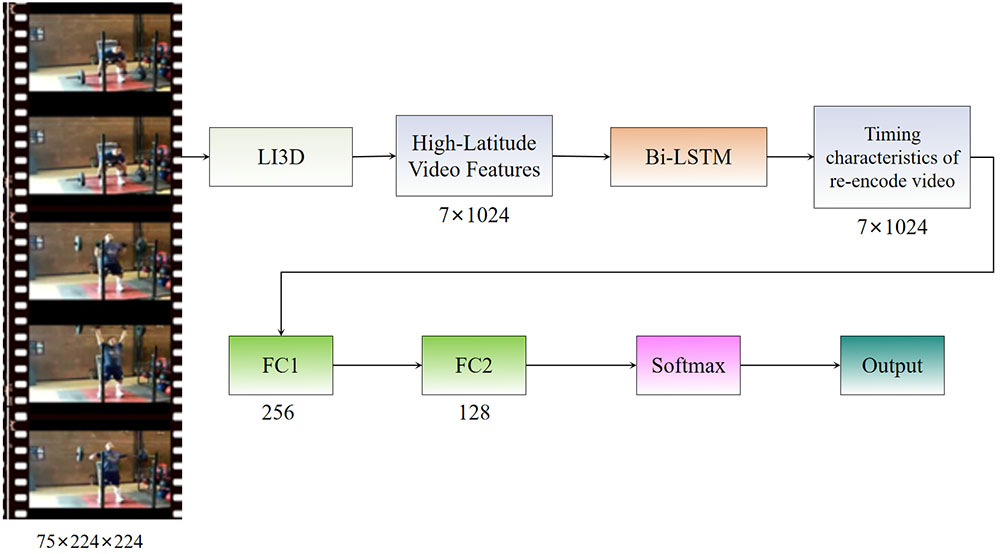

This paper proposes an improved video action recognition method with three key components. First, in the data preprocessing stage, we develop multi-temporal-scale video frame extraction and multi-spatial-scale video cropping to enrich content information and standardize the input format. Second, we propose a lightweight Inception-3D (LI3D) network for spatio-temporal feature extraction and design a soft-association feature aggregation module to improve the recognition accuracy of key actions in videos. Third, we employ a bidirectional LSTM network to contextualize the feature sequences extracted by LI3D, strengthening the representation of temporal data. To improve the model's robustness and generalization ability, the preprocessing stage applies spatial- and temporal-scale data augmentation, which extracts video key frames and captures actions in key regions. We also study spatio-temporal feature extraction for video data in depth, using the LI3D network together with transfer learning to extract spatial and temporal information. Experimental results show that the proposed method achieves significant performance improvements on video action recognition tasks, offering new insights and approaches for research in related fields.
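As a rough illustration of the preprocessing idea described above, the following sketch samples frame indices at several temporal strides and computes centred crop boxes at several spatial scales. It is not the authors' implementation: the function names, the stride values `(1, 2, 4)`, the crop scales, and the centred-sampling policy are all assumptions chosen for illustration, since the abstract does not specify them.

```python
def sample_frame_indices(num_frames, clip_len, stride):
    """Return clip_len frame indices spaced `stride` apart, centred in the video.

    Indices that would run past the end are clamped to the last frame
    (an assumed padding policy, not taken from the paper).
    """
    if num_frames <= 0 or clip_len <= 0 or stride <= 0:
        raise ValueError("num_frames, clip_len and stride must be positive")
    span = (clip_len - 1) * stride + 1          # frames covered by one clip
    start = max(0, (num_frames - span) // 2)    # centre the clip when it fits
    return [min(start + i * stride, num_frames - 1) for i in range(clip_len)]


def multi_temporal_scale_indices(num_frames, clip_len, strides=(1, 2, 4)):
    """Map each (hypothetical) temporal stride to its list of frame indices."""
    return {s: sample_frame_indices(num_frames, clip_len, s) for s in strides}


def center_crop_box(width, height, scale):
    """Return (left, top, right, bottom) of a centred square crop.

    The crop side is `scale` times the shorter image side, with 0 < scale <= 1.
    """
    if not 0 < scale <= 1:
        raise ValueError("scale must be in (0, 1]")
    side = int(min(width, height) * scale)
    left = (width - side) // 2
    top = (height - side) // 2
    return (left, top, left + side, top + side)


def multi_spatial_scale_boxes(width, height, scales=(1.0, 0.75, 0.5)):
    """Map each (hypothetical) spatial scale to its crop box."""
    return {s: center_crop_box(width, height, s) for s in scales}
```

Each crop would then be resized to one fixed resolution so that clips from every temporal and spatial scale share the same input shape; the resizing step is left to whichever image library is in use.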

Keywords

video action recognition

multi-scale preprocessing

lightweight I3D (LI3D)

spatio-temporal feature extraction

bidirectional LSTM

Funding

This work received no funding.

Cite This Article

APA Style

Wang, F., Jin, X., & Yi, S. (2024). LI3D-BiLSTM: A Lightweight Inception-3D Networks with BiLSTM for Video Action Recognition. IECE Transactions on Emerging Topics in Artificial Intelligence, 1(1), 58–70. https://doi.org/10.62762/TETAI.2024.628205

Publisher's Note

IECE stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Institute of Emerging and Computer Engineers (IECE) or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.
