Abstract

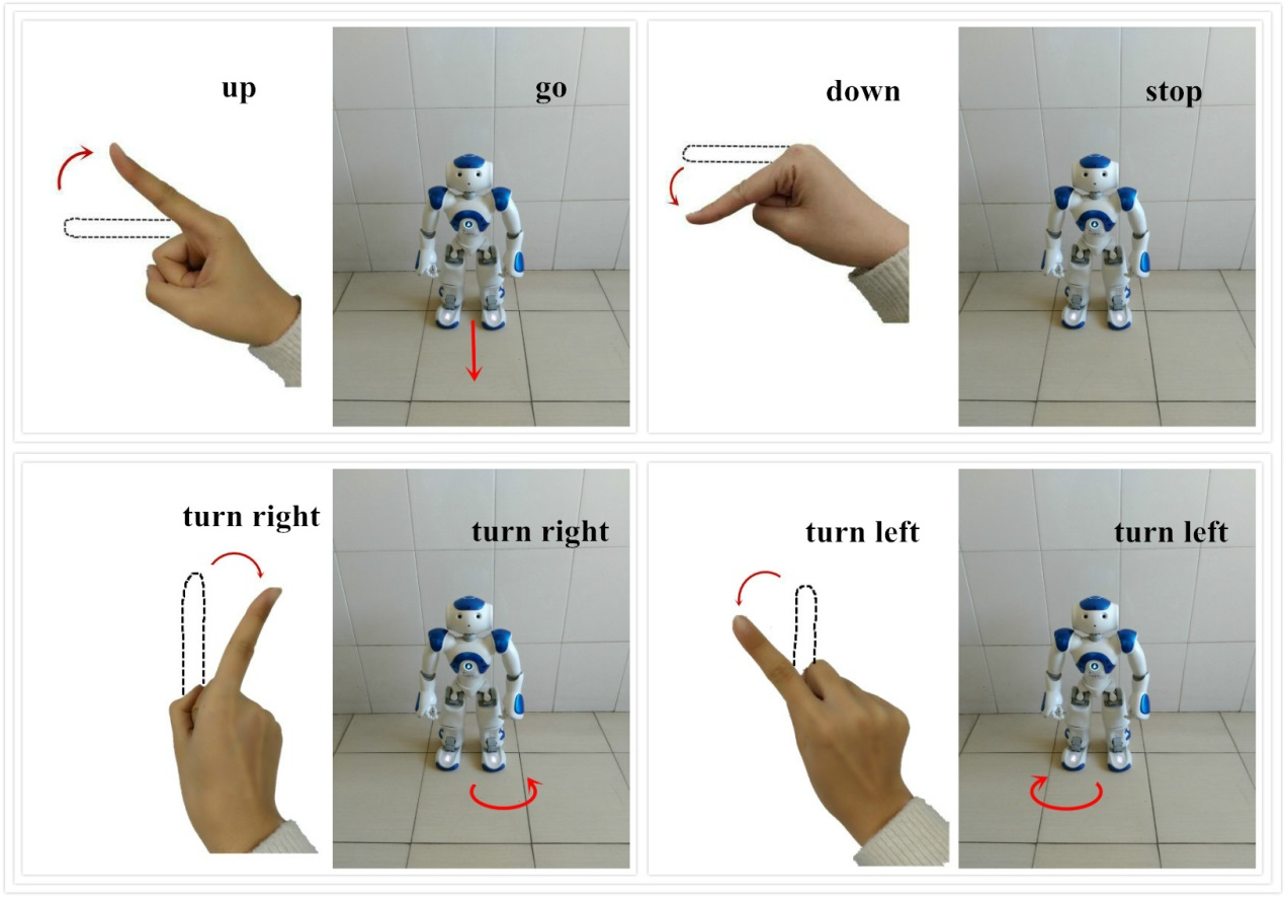

Humanoid robots play an important role in many fields. Efficient and intuitive control input is critical to their use and, in many cases, requires remote operation. In this paper, we investigate the potential advantages of inertial sensors as a key element of command signal generation for humanoid robot control systems. The goal is to use inertial sensors to detect precisely when and how the user moves, which in turn enables precise control commands. Finger gestures are first captured as signals from the inertial sensor. Movement commands are then extracted from these signals through filtering and recognition, and are subsequently translated into robot movements according to the attitude angle of the inertial sensor. Experiments demonstrate the accuracy and effectiveness of finger-gesture control with this method. Using inertial sensors for gesture recognition simplifies the process of sending control inputs, paving the way for more user-friendly and efficient interfaces in humanoid robot operation. This approach both improves the precision of control commands and makes humanoid robots more practical to deploy in real-world scenarios.
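
The pipeline summarized above (filter the sensor signal, estimate the attitude angle, map it to a movement command) can be illustrated with a minimal sketch. It is not the authors' implementation: the exponential low-pass filter, the accelerometer-only roll/pitch estimate, the 15-degree dead zone, the command names, and all function names are assumptions chosen for illustration.

```python
import math


def low_pass(samples, alpha=0.2):
    """Exponential moving average; a stand-in for the paper's filtering step."""
    out = []
    prev = samples[0]
    for x in samples:
        prev = alpha * x + (1 - alpha) * prev
        out.append(prev)
    return out


def attitude_from_accel(ax, ay, az):
    """Roll and pitch in degrees from one accelerometer reading.

    Assumes the sensor is nearly static, so the reading is dominated by gravity.
    """
    roll = math.degrees(math.atan2(ay, az))
    pitch = math.degrees(math.atan2(-ax, math.hypot(ay, az)))
    return roll, pitch


def command_from_attitude(roll, pitch, dead_zone=15.0):
    """Map attitude angles to a hypothetical robot command."""
    if abs(pitch) < dead_zone and abs(roll) < dead_zone:
        return "stop"
    if abs(pitch) >= abs(roll):
        return "forward" if pitch > 0 else "backward"
    return "right" if roll > 0 else "left"


if __name__ == "__main__":
    # Hypothetical raw x-axis readings (in g) while the finger tilts.
    ax_filtered = low_pass([0.0, -0.1, -0.6, -0.5, -0.5])
    roll, pitch = attitude_from_accel(ax_filtered[-1], 0.0, 0.866)
    print(command_from_attitude(roll, pitch))
```

A real system would typically fuse gyroscope and accelerometer data and use a trained recognizer rather than fixed thresholds; the sketch only shows how the stages connect.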

Funding

This work received no funding.

Cite This Article

APA Style

Xie, J., Na, X., & Yi, S. (2024). Enhanced Recognition for Finger Gesture-Based Control in Humanoid Robots Using Inertial Sensors. IECE Transactions on Sensing, Communication, and Control, 1(2), 89–100. https://doi.org/10.62762/TSCC.2024.805710

Publisher's Note

IECE stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Institute of Emerging and Computer Engineers (IECE) or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.
