Abstract

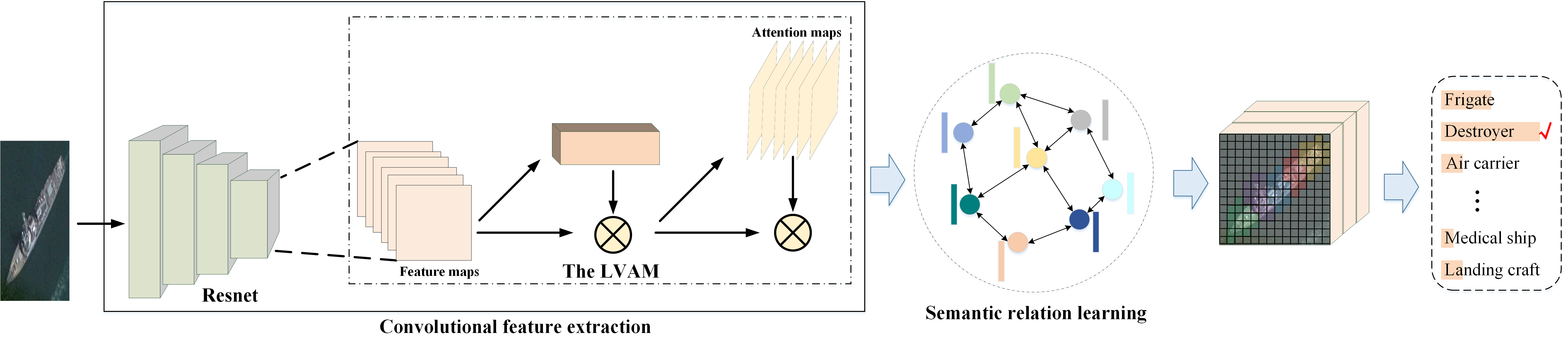

Remote sensing images play an important role in maritime surveillance, and the detection and recognition of maritime objects has therefore become a prominent research focus. However, most existing studies on remote sensing image classification concentrate on model performance and neglect transparency and explainability. To address this issue, this study proposes an explainable classification method based on a graph network, which uses the relationships between regions of an object to infer its category. First, a local visual attention module is designed to focus on different but important regions of the object. Then, a graph network is used to explore the underlying relationships between these regions and to obtain a discriminative feature. Finally, a loss function is constructed to provide a supervision signal that explicitly guides the attention maps and the overall learning process of the model. With these designs, the model can not only exploit the underlying relationships between regions but also provide explainable visual attention that people can interpret. Experiments on two public fine-grained ship classification datasets indicate that the classification performance and explainability of the proposed method are highly competitive.
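The pipeline summarized above (attention over object regions, followed by a graph network over those regions) can be illustrated with a minimal NumPy sketch. This is not the authors' implementation: the abstract does not specify the architecture, so the softmax attention pooling, the similarity-based fully connected graph, the single message-passing layer, and every name and dimension below (`region_features`, `graph_layer`, 4 regions, 16 channels, 5 classes) are assumptions chosen only for illustration. The attention-guiding loss is omitted because the abstract does not describe its form.

```python
import numpy as np

def softmax(x, axis=-1):
    """Numerically stable softmax along the given axis."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def region_features(feature_map, attention_logits):
    """Pool one feature vector per attention map (assumed design).

    feature_map:      (C, H, W) backbone features
    attention_logits: (K, H, W) one unnormalized map per region
    returns:          (K, C) attention-weighted region features
    """
    C, H, W = feature_map.shape
    K = attention_logits.shape[0]
    attn = softmax(attention_logits.reshape(K, H * W), axis=1)  # (K, HW)
    feats = feature_map.reshape(C, H * W)                       # (C, HW)
    return attn @ feats.T                                       # (K, C)

def graph_layer(nodes, weight):
    """One message-passing step over a fully connected region graph.

    Edge weights come from scaled dot-product similarity between
    region features (an assumption, not taken from the paper).
    """
    scores = nodes @ nodes.T / np.sqrt(nodes.shape[1])
    adj = softmax(scores, axis=1)                 # (K, K), rows sum to 1
    return np.maximum(adj @ nodes @ weight, 0.0)  # ReLU activation

# Random stand-ins for backbone output and learned parameters.
rng = np.random.default_rng(0)
feature_map = rng.normal(size=(16, 7, 7))
attention_logits = rng.normal(size=(4, 7, 7))
weight = rng.normal(size=(16, 8)) * 0.1
classifier = rng.normal(size=(8, 5)) * 0.1

nodes = region_features(feature_map, attention_logits)  # (4, 16)
updated = graph_layer(nodes, weight)                    # (4, 8)
pooled = updated.mean(axis=0)                           # (8,)
probs = softmax(pooled @ classifier)                    # (5,) class probabilities
```

In a sketch like this, the normalized attention maps are what would be visualized as the explanation, while the graph layer lets each region's feature be refined by the others before classification.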

Keywords

Explainable visual feature

remote sensing image

ship classification

Funding

This work received no funding.

Cite This Article

APA Style

Li, H., Xiong, W., Cui, Y., & Xiong, Z. (2024). Explainable Classification of Remote Sensing Ship Images Based on Graph Network. Chinese Journal of Information Fusion, 1(2), 126–133. https://doi.org/10.62762/CJIF.2024.932552

Publisher's Note

IECE stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Institute of Emerging and Computer Engineers (IECE) or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.
