Abstract

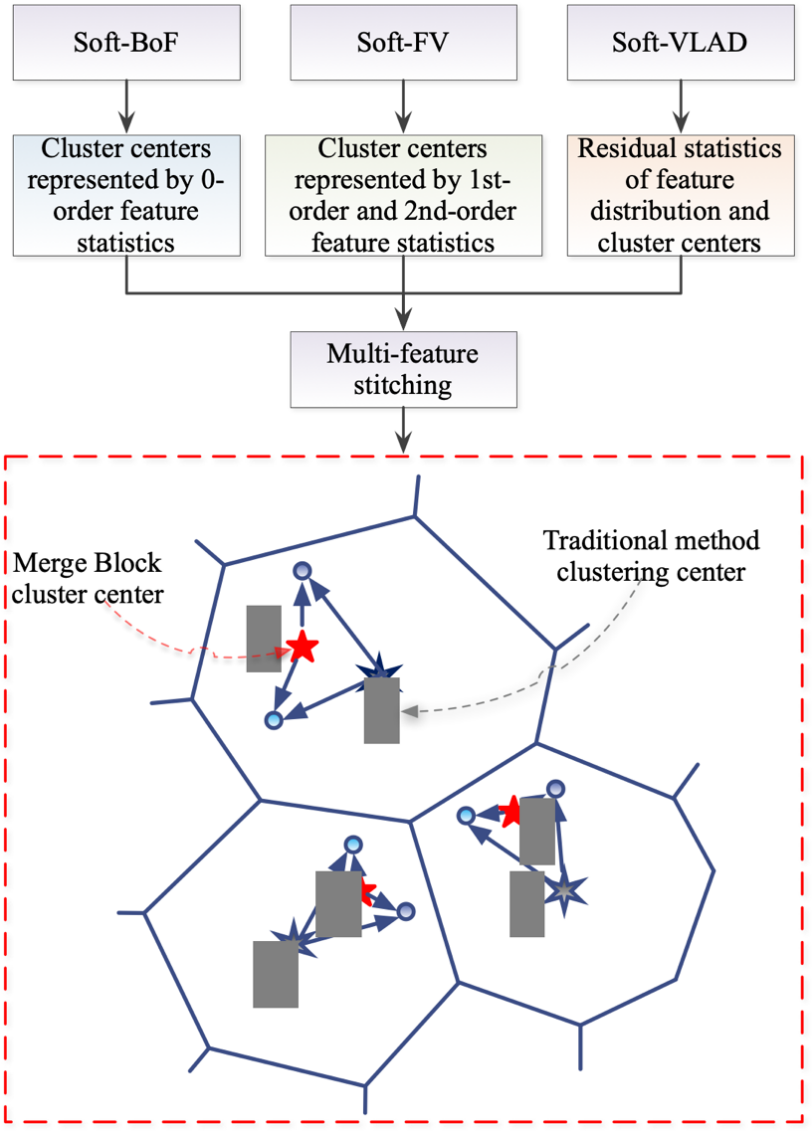

Efficiently extracting and fusing video features to accurately distinguish complex and similar actions remains a central challenge in video action recognition. Although existing methods are effective at feature extraction, they often perform poorly on complex scenes and on similar actions. This shortcoming arises primarily from their reliance on single-dimensional feature extraction, which overlooks the relationships among features and the importance of multi-dimensional fusion. To address this issue, this paper introduces a framework based on a soft correlation strategy that strengthens the representational capacity of features by performing multi-level, multi-dimensional feature aggregation and concatenating the temporal features produced by the network. Our end-to-end multi-feature encoding soft correlation concatenation aggregation layer, placed at the temporal feature output of the video action recognition network, aggregates and integrates the output temporal features. The result is a composite feature that unifies multi-dimensional information and markedly improves the network's ability to differentiate similar video actions. Experimental results indicate that the proposed approach improves the performance of video action recognition networks, yielding a more complete description of images as well as higher accuracy and robustness.
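As a rough illustration of the general idea of aggregating and concatenating temporal features, the sketch below fuses a sequence of per-time-step feature vectors into one composite vector. It is not the layer proposed in the paper: the abstract does not define the soft correlation computation, so the sketch assumes it can be approximated by softmax-normalized similarity between each time step and the temporal mean. The function names `softmax` and `soft_correlation_aggregate`, the choice of three concatenated views, and the toy input are all hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def soft_correlation_aggregate(features):
    """Fuse per-time-step features (a T x D list of lists) into one vector.

    Assumption (not from the paper): "soft correlation" is modeled as a
    softmax over the scaled dot product of each time step with the
    temporal mean feature.
    """
    T, D = len(features), len(features[0])
    mean = [sum(f[d] for f in features) / T for d in range(D)]
    # Similarity of each time step to the mean feature, scaled by sqrt(D).
    scores = [sum(a * b for a, b in zip(f, mean)) / math.sqrt(D) for f in features]
    weights = softmax(scores)
    # Soft-weighted sum of the time steps.
    weighted = [sum(w * f[d] for w, f in zip(weights, features)) for d in range(D)]
    last = list(features[-1])
    # Concatenate three views: soft-weighted, mean-pooled, and last time step.
    return weighted + mean + last, weights


# Toy input: three time steps with two-dimensional features.
features = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
fused, weights = soft_correlation_aggregate(features)
```

In this toy setting the fused vector has length 3 × D, and time steps that agree more with the overall sequence receive larger weights. In a real network the input would be the temporal output of the recognition backbone (for example, a bidirectional LSTM, as the keywords suggest), and the weighting would be learned end to end.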

Keywords

video action recognition

soft correlation

spatio-temporal feature extraction

concatenation aggregation structure

bidirectional LSTM

Funding

This work received no funding.

Cite This Article

APA Style

Wang, F., & Yi, S. (2024). Spatio-temporal Feature Soft Correlation Concatenation Aggregation Structure for Video Action Recognition Networks. IECE Transactions on Sensing, Communication, and Control, 1(1), 60–71. https://doi.org/10.62762/TSCC.2024.212751

Publisher's Note

IECE stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Institute of Emerging and Computer Engineers (IECE) or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.
