Abstract

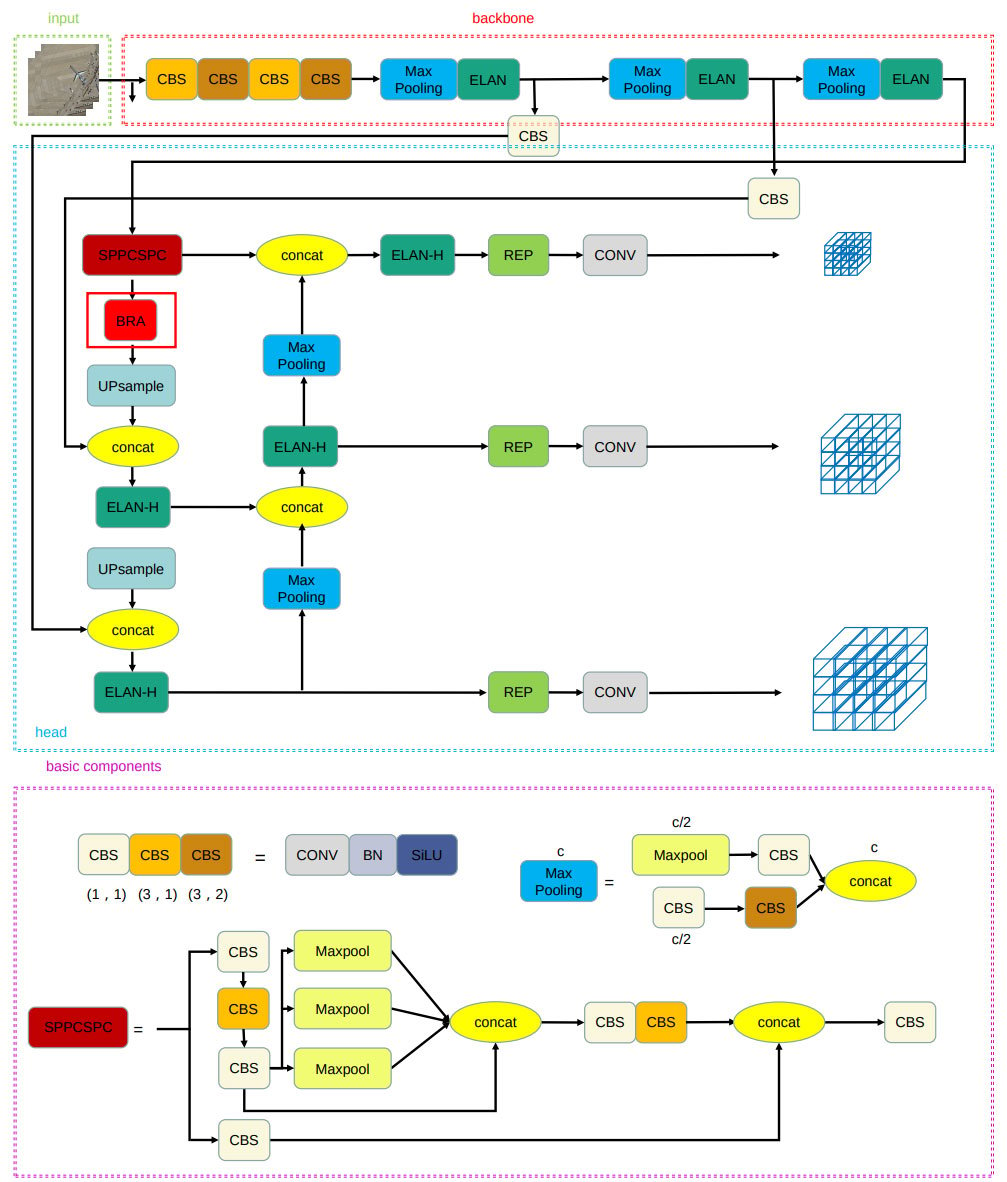

As remote sensing imaging technology advances, its use in agriculture is becoming increasingly widespread. Both cultivation and transportation can benefit from remote sensing images that help ensure an adequate food supply. However, such images are often captured in harsh environments and contain densely distributed targets with many gaps between them, which poses major challenges for traditional target detection methods. Frequent missed detections and inaccurate bounding boxes severely constrain further analysis and application of remote sensing images in the agricultural sector. This study presents an enhanced version of the YOLO algorithm, YOLOv7-bw, tailored to efficient detection of densely distributed small targets in remote sensing images. First, we replace the 3×3 convolutions in the last two ELAN modules with Deformable ConvNets v2 so that the backbone can better extract features of varied objects. Second, the proposed detector adds a Bi-level Routing Attention module to the SPPCSPC pyramid pooling network of YOLOv7, which intensifies attention on areas where targets are concentrated and, through effective feature fusion, strengthens the network's capacity to extract features of dense small targets. Third, our approach uses the dynamic non-monotonic WIoUv3 as the network's loss function, which assigns the most appropriate gradient gain at each moment and improves the network's focus on detecting targets accurately. Finally, comparative experiments on the DIOR remote sensing image dataset show that the proposed YOLOv7-bw achieves an mAP@0.5 of 85.63% and an mAP@0.5:0.95 of 65.93%, surpassing the YOLOv7 detector by 1.93% and 2.03%, respectively, which supports the effectiveness of our approach.
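To make the "dynamic non-monotonic" gradient gain of WIoUv3 more concrete, the following is a minimal sketch in plain Python. It follows the formulation of Wise-IoU v3 as it is commonly stated (a distance-weighted IoU loss multiplied by a gain that depends on how much of an outlier a box is); it is not the authors' implementation. The function names, the `(x1, y1, x2, y2)` box layout, and the hyperparameter values `alpha=1.9` and `delta=3.0` are assumptions made for illustration, and the running mean of the IoU loss is simply passed in as an argument.

```python
import math


def iou(box_a, box_b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def wiou_v1(pred, gt):
    """Return (distance-weighted IoU loss, plain IoU loss) for one box pair."""
    l_iou = 1.0 - iou(pred, gt)
    # Squared distance between the two box centres.
    cx_p, cy_p = (pred[0] + pred[2]) / 2, (pred[1] + pred[3]) / 2
    cx_g, cy_g = (gt[0] + gt[2]) / 2, (gt[1] + gt[3]) / 2
    dist_sq = (cx_p - cx_g) ** 2 + (cy_p - cy_g) ** 2
    # Width and height of the smallest box enclosing both boxes.
    # In a training framework this denominator would be excluded from
    # the gradient; plain Python has no gradient, so it is just a number.
    wg = max(pred[2], gt[2]) - min(pred[0], gt[0])
    hg = max(pred[3], gt[3]) - min(pred[1], gt[1])
    r_wiou = math.exp(dist_sq / (wg ** 2 + hg ** 2))
    return r_wiou * l_iou, l_iou


def focusing_gain(l_iou, mean_l_iou, alpha=1.9, delta=3.0):
    """Non-monotonic gain r = beta / (delta * alpha ** (beta - delta)).

    beta is the "outlier degree": this box's IoU loss relative to the
    running mean IoU loss. alpha and delta are assumed hyperparameters.
    """
    beta = l_iou / mean_l_iou
    return beta / (delta * alpha ** (beta - delta))


def wiou_v3(pred, gt, mean_l_iou, alpha=1.9, delta=3.0):
    """WIoU v3 sketch: the v1 loss scaled by the focusing gain."""
    l_v1, l_iou = wiou_v1(pred, gt)
    return focusing_gain(l_iou, mean_l_iou, alpha, delta) * l_v1


if __name__ == "__main__":
    mean_l_iou = 0.5  # hypothetical running mean of the IoU loss
    print(wiou_v3((0, 0, 10, 10), (5, 5, 15, 15), mean_l_iou))
    # Gain for a well-fitted, an average, and a very poor box.
    for beta in (0.1, 1.5, 6.0):
        print(beta, focusing_gain(beta, 1.0))
```

Under these assumptions the gain is small for boxes that already fit well (low outlier degree) and for very poor boxes (high outlier degree), and largest for boxes of ordinary quality, which is the sense in which the gain is non-monotonic. Because the outlier degree is measured against a running mean that changes during training, the gain assigned to a given box quality also changes over time.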

Keywords

remote sensing image

small object detection

harsh food supply management

deep learning

YOLOv7 architecture

Data Availability Statement

Data will be made available on request.

Funding

This work was supported in part by the National Natural Science Foundation of China under Grant 62173007, Grant 62203020, Grant 62473008, Grant 62433002, and Grant 62476014; in part by the Beijing Nova Program under Grant 20240484710; in part by the Project of Humanities and Social Sciences (Ministry of Education in China, MOC) under Grant 22YJCZH006; in part by the Beijing Scholars Program under Grant 099; in part by the Project of All China Federation of Supply and Marketing Cooperatives under Grant 202407; and in part by the Project of Beijing Municipal University Teacher Team Construction Support Plan under Grant BPHR20220104.

Conflicts of Interest

The authors declare no conflicts of interest.

Ethical Approval and Consent to Participate

Not applicable.

Cite This Article

APA Style

Jin, X., Fu, H., Kong, J., Ma, H., Bai, Y., & Su, T. (2025). A Deep-Learning Detector via Optimized YOLOv7-bw Architecture for Dense Small Remote-Sensing Targets in Harsh Food Supply Applications. Chinese Journal of Information Fusion, 2(1), 38–58. https://doi.org/10.62762/CJIF.2025.919344

Publisher's Note

IECE stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Copyright © 2025 by the Author(s). Published by Institute of Emerging and Computer Engineers. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made.
